Cache memory is a critical component in computing. It can respond to CPU requests in roughly a nanosecond, which can be up to 100 times faster than main memory (RAM). Its primary purpose is to speed up applications by providing rapid access to frequently used data.

Important as it is, cache memory can be difficult to optimize. Newer technology has made the process more straightforward, however, improving efficiency and simplifying management.

Optimizing Caching in Serverless Computing

Cache optimization matters just as much in serverless computing. Serverless caching services, such as Amazon ElastiCache Serverless, offer caching that scales with demand.

These solutions enable users to adjust cache memory according to demand while ensuring cost-effectiveness by charging only for the utilized capacity. A significant advantage of serverless caching is the automation of optimization processes.

This automation reduces the necessity for manual oversight, thereby enhancing system adaptability in response to fluctuating workloads. ElastiCache Serverless, for example, demonstrates this capability by dynamically adjusting its scale based on user demand, facilitating optimal performance without the burden of infrastructure management.

This shift in cache maintenance simplifies operational processes, allowing businesses to focus more on development and less on operational complexities.

Effective Partitioning and Distribution of Data in Cache Memory

Cache performance depends heavily on how data is partitioned and distributed. Effective partitioning makes good use of cache space and therefore reduces cache misses, which occur when requested data is absent from the cache and must be fetched from a slower source.
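To make the cost of a miss concrete, here is a rough estimate of average access time as a function of hit ratio. It is a simplified sketch, not a model of any particular system: the function name is hypothetical, and the default latencies (1 ns for a hit, 100 ns for a miss) are illustrative assumptions based on the approximate figures quoted at the start of this article.

```python
def average_access_time_ns(hit_ratio, cache_ns=1.0, backing_ns=100.0):
    """Estimate the mean time per access for a given cache hit ratio.

    Assumption: a hit costs cache_ns and a miss costs backing_ns.
    Both defaults are illustrative values, not measurements.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    # Weighted average of the hit cost and the miss cost.
    return hit_ratio * cache_ns + (1.0 - hit_ratio) * backing_ns


if __name__ == "__main__":
    for ratio in (0.50, 0.90, 0.99):
        estimate = average_access_time_ns(ratio)
        print(f"hit ratio {ratio:.0%}: about {estimate:.1f} ns per access")
```

Under these assumed numbers, raising the hit ratio from 90% to 99% cuts the estimate from about 10.9 ns to about 2 ns, which is why even a small reduction in misses can matter.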

Equally crucial is the strategy for data distribution within the cache. Strategically placing data in alignment with its access frequency can markedly enhance performance. This necessitates the customization of partitioning and distribution approaches for specific use cases, as a strategy effective in one scenario may be suboptimal in another.

Therefore, a careful and informed approach to configuration is imperative. Understanding the fundamental characteristics of the data and anticipated access patterns is vital in making informed partitioning decisions.
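One common way to partition cached data is to hash each key and map the result to a partition. The sketch below is a minimal illustration of that idea, assuming string keys and a fixed number of partitions; `partition_for` and the `user:` key format are hypothetical names chosen for the example, not part of any specific caching product.

```python
import hashlib
from collections import Counter


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition index in the range [0, num_partitions)."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    # Use a stable hash so the same key always lands on the same partition,
    # regardless of process or machine.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


if __name__ == "__main__":
    keys = [f"user:{i}" for i in range(1000)]  # hypothetical key format
    spread = Counter(partition_for(k, 4) for k in keys)
    # Shows how many of the sample keys landed on each partition.
    print(sorted(spread.items()))
```

Simple modulo hashing spreads keys fairly evenly, but changing the number of partitions remaps most keys. Consistent hashing is a common alternative when partitions are added or removed often. Which approach suits a given workload depends on the access patterns discussed above.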

Routine Maintenance and Cache Monitoring

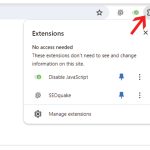

Effective cache management requires regular maintenance and attentive monitoring to sustain optimal performance. This involves tracking metrics that reveal how the cache is being used and that expose potential bottlenecks or inefficiencies in the system.

The tools and practices for cache performance monitoring vary, aiming to provide a comprehensive understanding of usage patterns and overall system health. Integrating analytics enhances this process by uncovering trends or issues that might not be immediately apparent.

Consistent monitoring and maintenance are crucial to ensure the cache system continues to operate at peak efficiency, adapting to changes in usage patterns and workloads.
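As a rough illustration of the kind of metrics worth tracking, the sketch below counts hits, misses, and evictions for a small in-process cache and derives a hit ratio from them. Everything here is hypothetical: `CacheStats`, `MonitoredCache`, `needs_attention`, and the 0.8 threshold are assumptions made for the example, and a production cache would normally expose similar counters through its own monitoring interface.

```python
from dataclasses import dataclass


@dataclass
class CacheStats:
    hits: int = 0
    misses: int = 0
    evictions: int = 0

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


class MonitoredCache:
    """A tiny cache that records usage counters alongside the data."""

    def __init__(self, max_entries: int):
        self.max_entries = max_entries
        self._data = {}
        self.stats = CacheStats()

    def get(self, key):
        if key in self._data:
            self.stats.hits += 1
            return self._data[key]
        self.stats.misses += 1
        return None

    def put(self, key, value):
        if key not in self._data and len(self._data) >= self.max_entries:
            # Evict the oldest inserted entry (dicts preserve insertion order).
            oldest = next(iter(self._data))
            del self._data[oldest]
            self.stats.evictions += 1
        self._data[key] = value


def needs_attention(stats: CacheStats, min_hit_ratio: float = 0.8) -> bool:
    """Flag the cache when its hit ratio falls below an assumed threshold."""
    return stats.hit_ratio < min_hit_ratio
```

Sampling these counters at regular intervals, rather than reading them once, makes it easier to spot the gradual shifts in usage that this section describes.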

Optimization of Cache Configuration Parameters

Cache parameters have a significant effect on performance, so configuring them calls for a precise and informed approach. Key parameters, such as eviction policies, cache size, and memory management, need careful adjustment to match the specific needs of the application.

For instance, a larger cache size generally reduces cache misses but increases memory usage and the associated costs. Eviction policies, which govern which data is removed from the cache to free up space, must likewise match the application’s data access patterns so that frequently needed data is not discarded.
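The trade-off between cache size and misses can be seen with a small least-recently-used (LRU) cache. LRU is only one of several possible eviction policies and is used here purely as an example. `LRUCache`, `count_misses`, and the access sequence are hypothetical names and data made up for this sketch.

```python
from collections import OrderedDict


class LRUCache:
    """Evicts the least recently used entry once capacity is exceeded."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key, loader):
        if key in self._items:
            # Mark the entry as most recently used.
            self._items.move_to_end(key)
            self.hits += 1
            return self._items[key]
        self.misses += 1
        value = loader(key)  # simulate fetching from the slower source
        self._items[key] = value
        if len(self._items) > self.capacity:
            # Drop the entry that has gone unused the longest.
            self._items.popitem(last=False)
        return value


def count_misses(capacity: int, accesses) -> int:
    cache = LRUCache(capacity)
    for key in accesses:
        cache.get(key, loader=lambda k: k.upper())
    return cache.misses


if __name__ == "__main__":
    accesses = ["a", "b", "c", "a", "b", "d", "a", "b", "c", "d"]
    for capacity in (2, 3, 4):
        print(f"capacity {capacity}: {count_misses(capacity, accesses)} misses")
```

For this particular sequence the miss count falls from 10 to 6 to 4 as capacity grows from 2 to 4 entries. A different access pattern, or a different eviction policy, could produce a very different curve, which is why these settings are best tuned against the application’s real traffic.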

Effective configuration of these parameters is essential for optimizing cache memory efficiency. It demands a deep understanding of the application’s requirements in relation to the capabilities and limitations of the caching system.

Keeping Your Cache Software Up to Date

Regular software updates are essential, and this is particularly true for cache systems, which must keep pace with the software around them. Updates typically bring performance enhancements, security patches, and new features that improve the overall effectiveness of the cache system.

The update process, though occasionally disruptive, can be managed effectively with careful planning and execution, minimizing downtime and facilitating smooth transitions. Regular updates are crucial not only for mitigating potential security vulnerabilities but also for maintaining the optimal performance of the caching system.

Consequently, routine software updates are integral to the effective management of a robust, 24/7 caching system.

In summary, optimizing cache memory performance involves a comprehensive approach, encompassing the selection of an appropriate caching solution and the careful adjustment of parameters tailored to specific needs.

By leveraging serverless caching options, businesses can significantly enhance application performance. Key practices in this optimization include efficient data partitioning and distribution, regular cache maintenance, vigilant monitoring, and the fine-tuning of configuration settings.

Additionally, keeping cache software up to date is crucial. Together, these practices lead to more efficient cache memory usage and a smoother user experience. Achieving optimal results requires a nuanced understanding of both the technology and the specific requirements of the application.